Categories

Money money money...

Could we help you? Please click the banners. We are young and desperately need the money

Last updated: September 10th 2024

Categories: Linux

Author: Marcus Fleuti

Simple PostgreSQL compressed database backups for Linux Admins with backup history and mail log (BASH)

A simple yet reliable script to automate your PostgreSQL Database backups

Introduction

In the world of database management, regular backups are crucial for data integrity and disaster recovery. For PostgreSQL users on Linux systems, having a reliable, automated backup solution is essential. This comprehensive guide will walk you through creating a powerful bash script to automate PostgreSQL backups, complete with compression, verification, and email notifications.

Whether you're a seasoned system administrator or a developer looking to enhance your database management skills, this tutorial will provide you with the tools and knowledge to implement a robust backup strategy for your PostgreSQL databases.

Understanding the PostgreSQL Backup Script

Our script is designed to perform the following tasks:

- Backup all non-template PostgreSQL databases

- Compress backup files to save storage space

- Verify the integrity of both raw and compressed backups

- Implement a retention policy to manage backup storage

- Provide detailed email notifications about the backup process

Let's dive into the key components of this script and understand how they work together to create a comprehensive backup solution.

Script Overview and Configuration

The script begins by setting up crucial configuration variables:

# Configuration

BACKUP_ROOT="/backup_postgresql"

DAYS_TO_KEEP=30 # Adjust this value to change retention period

MIN_DUMP_SIZE=1024 # Minimum expected size of a dump file in bytes

# Utilities

PSQL=$(which psql)

PG_DUMP=$(which pg_dump)

PG_RESTORE=$(which pg_restore)

GZIP=$(which gzip)

# E-Mail settings

MAIL_SENDER="serveradmin"

MAIL_SUBJECT="PostgreSQL backup on myserver.mydomain.tld"

MAIL_RECIPIENTS="server-admin@mydomain.tld"

MAIL_AGENT=$(which mail)

These settings allow you to customize the script to your specific environment, including backup location, retention period, and email notifications.

Key Features of the Backup Script

1. Database Discovery and Backup

The script automatically discovers all non-template databases in your PostgreSQL instance:

DATABASES=$(sudo -u postgres $PSQL -t -c "SELECT datname FROM pg_database WHERE datistemplate = false AND datname != 'postgres';")

It then iterates through each database, creating individual backup files:

for DB in $DATABASES; do

RAW_BACKUP_FILE="${BACKUP_DIR}/${DB}.dump"

GZIP_BACKUP_FILE="${RAW_BACKUP_FILE}.gz"

TEMP_GZIP_FILE="${GZIP_BACKUP_FILE}.tmp"

# Dump the database

sudo -u postgres $PG_DUMP -v -Fc $DB -f "$RAW_BACKUP_FILE" >/dev/null 2>&1

# ... (compression and verification steps)

done

2. Backup Compression

To optimize storage usage, the script compresses each backup using gzip:

$GZIP -c "$RAW_BACKUP_FILE" > "$TEMP_GZIP_FILE"

3. Backup Verification

One of the script's standout features is its robust verification process. It checks both the raw dump and the compressed file:

verify_raw_dump() {

local dump_file=$1

# Check file size

local file_size=$(stat -c%s "$dump_file")

if [ "$file_size" -lt "$MIN_DUMP_SIZE" ]; then

return 1

fi

# Test dump with pg_restore --list (safe, read-only operation)

if ! $PG_RESTORE --list "$dump_file" >/dev/null 2>&1; then

return 1

fi

return 0

}

verify_gzip() {

local gzip_file=$1

# Test gzip file integrity

if ! $GZIP -t "$gzip_file" >/dev/null 2>&1; then

return 1

fi

return 0

}

4. Retention Policy

To manage disk space, the script implements a retention policy, deleting backups older than a specified number of days:

delete_old_backups() {

local deleted_count=0

while IFS= read -r -d '' dir; do

if [ -d "$dir" ]; then

rm -rf "$dir"

((deleted_count++))

fi

done < <(find "$BACKUP_ROOT" -maxdepth 1 -type d -mtime +$DAYS_TO_KEEP -print0)

MAIL_BODY="${MAIL_BODY}\nDeleted $deleted_count backup(s) older than $DAYS_TO_KEEP days.\n"

}

5. Error Handling and Reporting

The script includes comprehensive error handling, capturing and reporting any issues that occur during the backup process:

if [ $DUMP_EXITCODE -ne 0 ] ; then

ERRORS=1

ERRORMESSAGES="$ERRORMESSAGES\n- $DB (Dump Error code: $DUMP_EXITCODE)"

rm -f "$RAW_BACKUP_FILE" # Remove failed dump file

else

# ... (verification and compression steps)

fi

6. Email Notifications

Upon completion, the script sends a detailed email report:

sudo -u ${MAIL_SENDER} echo -e "${MAIL_BODY}" | sudo -u ${MAIL_SENDER} ${MAIL_AGENT} -s "${MAIL_SUBJECT}" ${MAIL_RECIPIENTS}

Implementing the Backup Script

Full backup script code

#!/bin/bash

# Configuration

BACKUP_ROOT="/backup_postgresql"

DAYS_TO_KEEP=30 # Adjust this value to change retention period

MIN_DUMP_SIZE=1024 # Minimum expected size of a dump file in bytes

# Utilities

PSQL=$(which psql)

PG_DUMP=$(which pg_dump)

PG_RESTORE=$(which pg_restore)

GZIP=$(which gzip)

# E-Mail settings

MAIL_SENDER="serveradmin"

MAIL_SUBJECT="PostgreSQL backup on myserver.mydomain.tld"

MAIL_RECIPIENTS="server-admin@mydomain.tld"

MAIL_AGENT=$(which mail)

TIME_START=$(date +"%s")

ERRORS=0

MAIL_BODY="Start of backup on $(date +%d.%B.%Y,%T).\n\n"

ERRORMESSAGES="Error(s) occurred backing up the following database(s):"

# Function to delete old backups

delete_old_backups() {

local deleted_count=0

while IFS= read -r -d '' dir; do

if [ -d "$dir" ]; then

rm -rf "$dir"

((deleted_count++))

fi

done < <(find "$BACKUP_ROOT" -maxdepth 1 -type d -mtime +$DAYS_TO_KEEP -print0)

MAIL_BODY="${MAIL_BODY}\nDeleted $deleted_count backup(s) older than $DAYS_TO_KEEP days.\n"

}

# Function to verify raw dump

verify_raw_dump() {

local dump_file=$1

# Check file size

local file_size=$(stat -c%s "$dump_file")

if [ "$file_size" -lt "$MIN_DUMP_SIZE" ]; then

return 1

fi

# Test dump with pg_restore --list (safe, read-only operation)

if ! $PG_RESTORE --list "$dump_file" >/dev/null 2>&1; then

return 1

fi

return 0

}

# Function to verify gzipped file

verify_gzip() {

local gzip_file=$1

# Test gzip file integrity

if ! $GZIP -t "$gzip_file" >/dev/null 2>&1; then

return 1

fi

return 0

}

# Function to format file size

format_size() {

local size=$1

printf "%.3f MB" $(echo "scale=3; $size / 1048576" | bc)

}

# Function to clean up temporary files

cleanup_temp_files() {

local backup_dir=$1

find "$backup_dir" -type f -name "*.dump" -delete

find "$backup_dir" -type f -name "*.tmp" -delete

}

# Get list of databases

DATABASES=$(sudo -u postgres $PSQL -t -c "SELECT datname FROM pg_database WHERE datistemplate = false AND datname != 'postgres';")

# Create backup directory if it doesn't exist

sudo -u postgres mkdir -p $BACKUP_ROOT

# Create a subdirectory with current date and time

BACKUP_DIR="${BACKUP_ROOT}/$(date +%Y-%m-%d-%H%M)"

sudo -u postgres mkdir -p $BACKUP_DIR

for DB in $DATABASES; do

RAW_BACKUP_FILE="${BACKUP_DIR}/${DB}.dump"

GZIP_BACKUP_FILE="${RAW_BACKUP_FILE}.gz"

TEMP_GZIP_FILE="${GZIP_BACKUP_FILE}.tmp"

# Dump the database

sudo -u postgres $PG_DUMP -v -Fc $DB -f "$RAW_BACKUP_FILE" >/dev/null 2>&1

DUMP_EXITCODE=$?

if [ $DUMP_EXITCODE -ne 0 ] ; then

ERRORS=1

ERRORMESSAGES="$ERRORMESSAGES\n- $DB (Dump Error code: $DUMP_EXITCODE)"

rm -f "$RAW_BACKUP_FILE" # Remove failed dump file

else

# Verify raw dump

if verify_raw_dump "$RAW_BACKUP_FILE"; then

RAW_SIZE=$(stat -c%s "$RAW_BACKUP_FILE")

# Compress the dump to a temporary file

$GZIP -c "$RAW_BACKUP_FILE" > "$TEMP_GZIP_FILE"

GZIP_EXITCODE=$?

if [ $GZIP_EXITCODE -ne 0 ] ; then

ERRORS=1

ERRORMESSAGES="$ERRORMESSAGES\n- $DB (Compression Error code: $GZIP_EXITCODE)"

rm -f "$RAW_BACKUP_FILE" "$TEMP_GZIP_FILE" # Remove both raw and failed gzip files

else

# Verify gzipped file

if verify_gzip "$TEMP_GZIP_FILE"; then

mv "$TEMP_GZIP_FILE" "$GZIP_BACKUP_FILE" # Rename temp file to final gzip file

GZIP_SIZE=$(stat -c%s "$GZIP_BACKUP_FILE")

MAIL_BODY="${MAIL_BODY}Successfully backed up and compressed $DB\n"

MAIL_BODY="${MAIL_BODY} Path: $GZIP_BACKUP_FILE\n"

MAIL_BODY="${MAIL_BODY} Size: $(format_size $RAW_SIZE) (uncompressed) / $(format_size $GZIP_SIZE) (compressed)\n\n"

rm -f "$RAW_BACKUP_FILE" # Remove raw dump file after successful compression

else

ERRORS=1

ERRORMESSAGES="$ERRORMESSAGES\n- $DB (Gzip verification failed)"

rm -f "$RAW_BACKUP_FILE" "$TEMP_GZIP_FILE" # Remove both raw and failed gzip files

fi

fi

else

ERRORS=1

ERRORMESSAGES="$ERRORMESSAGES\n- $DB (Raw dump verification failed)"

rm -f "$RAW_BACKUP_FILE" # Remove failed dump file

fi

fi

done

# Final cleanup of any remaining temporary files

cleanup_temp_files "$BACKUP_DIR"

if [ $ERRORS -eq 0 ] ; then

MAIL_BODY="${MAIL_BODY}\nAll databases were successfully backed up, verified, and compressed."

MAIL_SUBJECT="${MAIL_SUBJECT} [SUCCESS]"

# Delete old backups only if the current backup was successful

delete_old_backups

else

MAIL_BODY="${MAIL_BODY}\n$ERRORMESSAGES"

MAIL_SUBJECT="${MAIL_SUBJECT} [!!!--- ERROR ---!!!]"

fi

TIME_DIFF=$(($(date +"%s")-${TIME_START}))

MAIL_BODY="${MAIL_BODY}\n\nEnd of backup on $(date +%d.%B.%Y,%T)."

MAIL_BODY="${MAIL_BODY}\nRuntime: $((${TIME_DIFF} / 60)) minutes and $((${TIME_DIFF} % 60)) seconds."

# Send status e-mail

sudo -u ${MAIL_SENDER} echo -e "${MAIL_BODY}" | sudo -u ${MAIL_SENDER} ${MAIL_AGENT} -s "${MAIL_SUBJECT}" ${MAIL_RECIPIENTS}

How to use the script

To use this script in your environment, follow these steps:

- Copy the script to a suitable location on your Linux server, e.g.,

/usr/local/bin/pg_backup.sh - Make the script executable:

chmod +x /usr/local/bin/pg_backup.sh - Edit the configuration variables at the beginning of the script to match your environment.

- Set up a cron job to run the script regularly, e.g., daily at 2 AM:

0 2 * * * /usr/local/bin/pg_backup.sh

Dependencies and Troubleshooting

This script relies on several standard Linux and PostgreSQL utilities:

psql: PostgreSQL interactive terminalpg_dump: PostgreSQL database backup toolpg_restore: PostgreSQL database restore toolgzip: Compression utilitymail: Command-line email client

Ensure these utilities are installed and accessible in your system's PATH. If you encounter any "command not found" errors, you may need to install the corresponding package or adjust your PATH.

Comparison with Other Backup Solutions

Let's compare this script with other popular PostgreSQL backup solutions:

| Feature | This Script | pg_basebackup | Barman |

|---|---|---|---|

| Logical Backup | Yes | No | Yes |

| Physical Backup | No | Yes | Yes |

| Compression | Yes | Yes | Yes |

| Backup Verification | Yes | No | Yes |

| Retention Policy | Yes | No | Yes |

| Email Notifications | Yes | No | Yes |

| Ease of Customization | High | Low | Medium |

While this script excels in customizability and features a comprehensive set of functionalities, it's important to note that tools like Barman offer additional features such as incremental backups and point-in-time recovery. The choice between these solutions depends on your specific requirements and infrastructure complexity.

Conclusion

This PostgreSQL backup script provides a robust, customizable solution for automating database backups on Linux systems. By implementing compression, verification, and a retention policy, it ensures that your data is safely backed up and easily recoverable when needed. The added benefit of email notifications keeps you informed about the backup process, allowing for quick intervention if any issues arise.

Remember to regularly test your backups by performing mock restores to ensure they're working as expected. With this script in place, you can rest easier knowing that your PostgreSQL databases are protected with a reliable backup strategy.

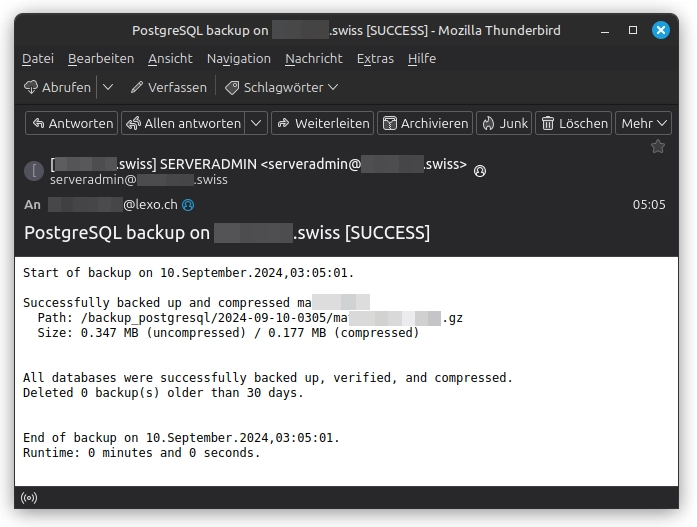

Sample email notification

Further Improvements and Considerations

While this script provides a solid foundation for PostgreSQL backups, there are several ways you could enhance it further:

- Implement parallel backups for faster processing of multiple databases.

- Add support for incremental backups to reduce backup time and storage requirements.

- Integrate with cloud storage solutions for off-site backup storage.

- Implement a restore function to simplify the recovery process.

- Add a more detailed/extended logging functionality to maintain a detailed record of backup operations.

- Additionally/optionally implement encryption for sensitive data protection.

Best Practices for PostgreSQL Backup Management

To ensure the effectiveness of your backup strategy, consider the following best practices:

- Regular Testing: Periodically test your backups by performing restore operations. This ensures that your backups are not only being created but are also usable when needed.

- Off-site Storage: Store copies of your backups in a different physical location or cloud storage to protect against local disasters. You could use other backup mechanisms to offload your data to other storage volumes such as:

- Monitoring: Set up monitoring for your backup process to quickly identify and address any issues.

- Documentation: Maintain clear documentation of your backup and restore procedures for quick reference during emergencies.

- Security: Ensure that your backup files are properly secured, with restricted access and, ideally, encryption for sensitive data.

Adapting the Script for Different Environments

While this script is designed for Linux environments, you may need to adapt it for different operating systems or specific PostgreSQL configurations. Here are some considerations:

Windows Environments

It is not recommended to use this script on a Windows system.

Cloud-based PostgreSQL Services

This script is not designed to work with a cloud-based PostgreSQL service (e.g., Amazon RDS, Google Cloud SQL).

High-Volume Databases

For databases with high transaction volumes, consider implementing a more sophisticated backup strategy, such as:

- Continuous archiving and point-in-time recovery (PITR)

- Streaming replication with delayed standby servers for quick recovery

- Incremental backups to reduce backup time and storage requirements

This script is designed for simple server applications where you want to create easy-to-recover full database backups.

Troubleshooting Common Issues

When implementing this backup script, you might encounter some common issues. Here's how to address them:

1. Permission Errors

ERROR: permission denied for database "mydb"

Solution: Ensure that the user running the script (typically 'postgres') has the necessary permissions to access all databases.

2. Disk Space Issues

No space left on device

Solution: Monitor your backup directory's disk space usage and adjust the retention policy as needed. Consider implementing disk space checks before starting the backup process.

3. Network Timeouts

could not connect to server: Connection timed out

Solution: If backing up remote databases, ensure stable network connectivity and consider implementing retry logic for network operations.

Integrating with Monitoring Systems

To enhance the reliability of your backup process, consider integrating this script with monitoring systems like Nagios, Zabbix, or Prometheus. You can modify the script to output status information in a format these systems can interpret, allowing for real-time monitoring of your backup jobs.

# Example: Output for Nagios

if [ $ERRORS -eq 0 ]; then

echo "OK - PostgreSQL backup completed successfully"

exit 0

else

echo "CRITICAL - PostgreSQL backup failed"

exit 2

fi

Conclusion: Ensuring Data Safety with Automated PostgreSQL Backups

Implementing an automated PostgreSQL backup solution is a critical step in safeguarding your data and ensuring business continuity. The script we've explored in this guide provides a robust foundation for creating reliable, compressed, and verified backups of your PostgreSQL databases.

By automating the backup process, implementing verification steps, and setting up email notifications, you can significantly reduce the risk of data loss and minimize downtime in case of system failures. Remember that while this script offers a comprehensive solution, it's essential to regularly review and update your backup strategy to keep pace with your evolving data management needs.

As you implement this backup solution, consider the specific requirements of your environment, potential scalability needs, and any compliance regulations you need to adhere to. With careful planning and regular maintenance, you can ensure that your PostgreSQL data remains secure and recoverable, providing peace of mind for you and your stakeholders.

We encourage you to adapt this script to your needs, contribute improvements to the community, and always stay proactive in your approach to data protection. After all, in the world of database management, a solid backup strategy is not just a best practice—it's an absolute necessity.