
Last updated: November 18th 2024

Categories: Linux

Author: Marcus Fleuti

Automating 404 Error Detection: A Bash Script for Efficient Google Search Console URL Validation and .htaccess Rule Generation

INTRODUCTION

Maintaining a healthy website means keeping track of and fixing broken links. While Google Search Console is excellent at identifying potential URL errors, validating these URLs and implementing fixes can be time-consuming. This article introduces a powerful Bash script that automates the process of validating URLs from Google Search Console and generating appropriate .htaccess redirect rules.

Why You Need This Script

If you've ever dealt with Google Search Console's URL inspection reports, you know the challenges:

- Large numbers of reported 404 errors, many of which might actually be working URLs

- Time-consuming manual URL validation

- Need to create redirect rules for genuinely broken URLs

- Difficulty in handling large URL lists efficiently

This script solves these problems by automating the entire process, from URL validation to .htaccess rule generation.

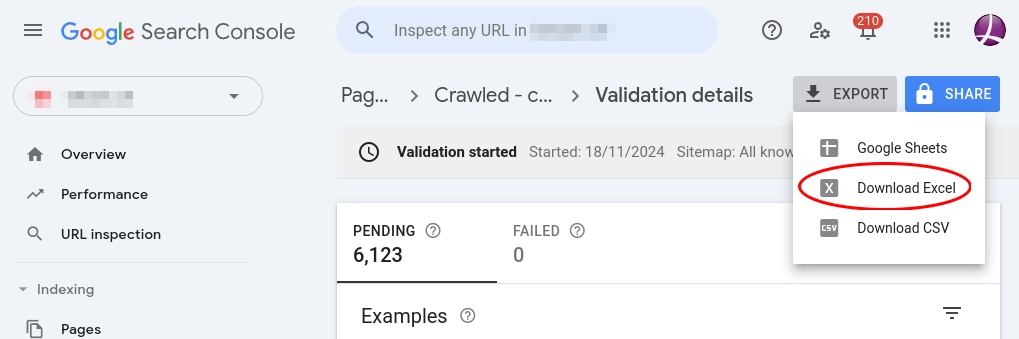

Getting Started with URL Export from Google Search Console

Before running the script, you'll need to export your URL list from Google Search Console:

- Log into Google Search Console

- Navigate to the Page indexing report (Indexing > Pages), called Coverage in older versions of Search Console

- Look for pages with 404 errors

- Export the list of URLs to a spreadsheet
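The export is a spreadsheet or CSV file, while the script reads a plain text file with one URL per line. A minimal sketch of the conversion, assuming a CSV export with a header row and the URL in the first column; the function name `extract_urls` and the file names are hypothetical and not part of the script:

```bash
#!/bin/bash
# Hypothetical helper: print the first column of a CSV export, one URL per line.
# Assumes a header row and URLs that contain no commas.
extract_urls() {
    local csv_file="$1"
    tail -n +2 "$csv_file" \
        | cut -d ',' -f 1 \
        | tr -d '\r"' \
        | sed '/^[[:space:]]*$/d'
}

# Example (file names are placeholders):
#   extract_urls export.csv > /opt/404-url-src.txt
```

`tail -n +2` drops the header row, `cut` keeps the first column, `tr` strips carriage returns and surrounding quotes, and `sed` removes blank lines.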

How the Script Works

The script performs several key functions:

- Reads a list of URLs from a text file

- Validates each URL using curl commands

- Identifies genuine 404 errors (and other error codes)

- Automatically generates .htaccess redirect rules

- Creates detailed reports of found issues

Key Features

- Automatic URL validation with status code checking

- Special handling of 410 (Gone) status codes

- Generation of 301 redirect rules for .htaccess

- Configurable target URL for redirects

- Support for UTM parameters in redirect targets

- Colorized output for better readability

Script Configuration and Usage

The script provides comprehensive configuration options that can be tailored to your specific requirements. Let's explore each configuration section in detail:

Basic Configuration

# Main Configuration Option

CREATE_HTACCESS_OUTPUT=true

This is the primary setting that controls the script's operation mode:

- true: Generates .htaccess rewrite rules for redirecting error URLs

- false: Only displays the original URLs without creating redirect rules

Redirect Configuration

# .htaccess Target Configuration

HTACCESS_TARGET_URL="https://www.example.com/de/"

HTACCESS_UTM_PARAMS="utm_campaign=20241118&utm_source=404-redirect&utm_medium=web"

HTACCESS_FLAGS="R=301,NC,L,NE"

These settings control how redirects are generated:

Target URL Configuration

- HTACCESS_TARGET_URL: The destination URL for all redirects

- Example for homepage redirect: "https://www.example.com/"

- Example for language-specific redirect: "https://www.example.com/en/"

- Example for category redirect: "https://www.example.com/products/"

UTM Parameter Configuration

- HTACCESS_UTM_PARAMS: Analytics tracking parameters

- utm_campaign: Identify the redirect campaign

- utm_source: Track the traffic source

- utm_medium: Specify the marketing medium

- Set to empty string ("") to disable UTM parameters

Rewrite Rule Configuration

- HTACCESS_FLAGS: Controls the behavior of redirect rules

- R=301: Permanent redirect (best for SEO)

- NC: Case-insensitive matching

- L: Last rule, stop processing other rules

- NE: No escaping of special characters (such as & and ?) in the redirect target
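The three settings above combine into a single RewriteRule line per broken URL. The following is a simplified, stand-alone sketch of that assembly; the function name `build_rule` is hypothetical, while the variable names and values are the ones from the configuration shown above:

```bash
#!/bin/bash
# Simplified sketch of how the configuration values form one rule.
HTACCESS_TARGET_URL="https://www.example.com/de/"
HTACCESS_UTM_PARAMS="utm_campaign=20241118&utm_source=404-redirect&utm_medium=web"
HTACCESS_FLAGS="R=301,NC,L,NE"

build_rule() {
    # RewriteRule patterns in .htaccess are matched without the leading slash
    local path="${1#/}"
    local target="$HTACCESS_TARGET_URL"
    # Append the tracking parameters only when some are configured
    if [[ -n "$HTACCESS_UTM_PARAMS" ]]; then
        target="${target}?${HTACCESS_UTM_PARAMS}"
    fi
    printf 'RewriteRule ^%s$ %s [%s]\n' "$path" "$target" "$HTACCESS_FLAGS"
}

build_rule "/old-page"
```

With HTACCESS_UTM_PARAMS set to an empty string, the target is the bare HTACCESS_TARGET_URL with no query string.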

File Path Configuration

# File Path Configurations

URL_LIST="/path/to/404-url-src.txt"

OUTPUT_FILE="/path/to/error-urls.txt"

HTACCESS_OUTPUT_FILE="/path/to/generated-htaccess-rules.txt"

Configure the input and output file locations:

- URL_LIST: Path to your input file containing URLs from Google Search Console

- Format: One URL per line

- Example content:

https://example.com/old-page

https://example.com/deleted-product

https://example.com/outdated-category

- OUTPUT_FILE: Where to save the list of discovered error URLs

- HTACCESS_OUTPUT_FILE: Where to save the generated .htaccess rules
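Every line in URL_LIST costs one HTTP request, so it can be worth normalizing the list before a run. A minimal sketch, assuming the list may contain Windows line endings, stray whitespace, and duplicates; the function name `clean_url_list` and the file names are hypothetical:

```bash
#!/bin/bash
# Hypothetical helper: normalize a URL list before validation.
clean_url_list() {
    local src="$1"
    # Strip carriage returns, trim each line, drop empty lines, deduplicate
    tr -d '\r' < "$src" \
        | sed -e 's/^[[:space:]]*//' -e 's/[[:space:]]*$//' -e '/^$/d' \
        | sort -u
}

# Example (file names are placeholders):
#   clean_url_list raw-urls.txt > /opt/404-url-src.txt
```

Note that `sort -u` also reorders the list alphabetically.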

Optional Advanced Settings

# Additional Configuration

HTACCESS_ESCAPE_DOTS=true # Escape dots in URL paths for regex

Additional settings for fine-tuning the script's behavior:

- HTACCESS_ESCAPE_DOTS:

- When true: Escapes dots in URLs (e.g., .html becomes \.html)

- Helps prevent regex pattern matching issues
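To illustrate why escaping matters: in a regular expression an unescaped dot matches any single character, so a pattern such as ^page.html$ would also match a request for pageXhtml. The sketch below shows the transformation on its own, using the same sed expression as the script; the function name `escape_dots` is hypothetical:

```bash
#!/bin/bash
# Hypothetical helper showing the effect of HTACCESS_ESCAPE_DOTS=true.
escape_dots() {
    local path="$1"
    # Replace every "." with "\." so the regex matches only a literal dot
    printf '%s\n' "$path" | sed 's/\./\\./g'
}

escape_dots "path/to/page.html"   # prints: path/to/page\.html
```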

Example Output

When the script runs successfully, it generates .htaccess rules like this:

RewriteRule ^old-page$ https://www.example.com/de/?utm_campaign=20241118&utm_source=404-redirect&utm_medium=web [R=301,NC,L,NE]

RewriteRule ^deleted-product$ https://www.example.com/de/?utm_campaign=20241118&utm_source=404-redirect&utm_medium=web [R=301,NC,L,NE]

RewriteRule ^outdated-category$ https://www.example.com/de/?utm_campaign=20241118&utm_source=404-redirect&utm_medium=web [R=301,NC,L,NE]

Running the Script

After configuring the script, you can run it using:

chmod +x script-name.sh

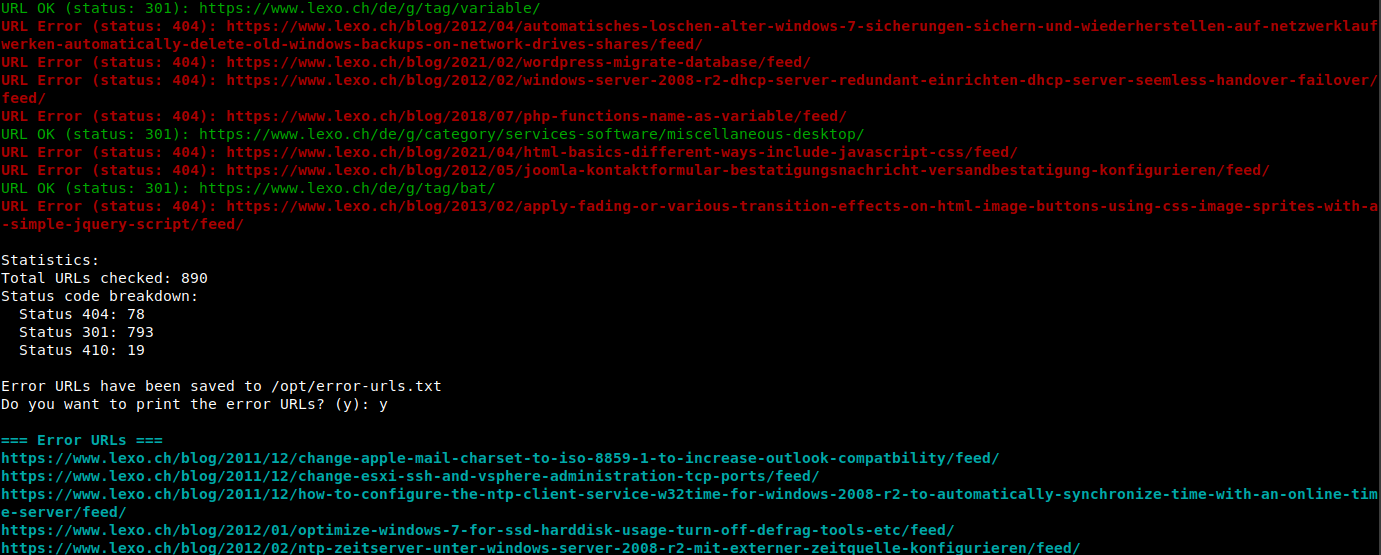

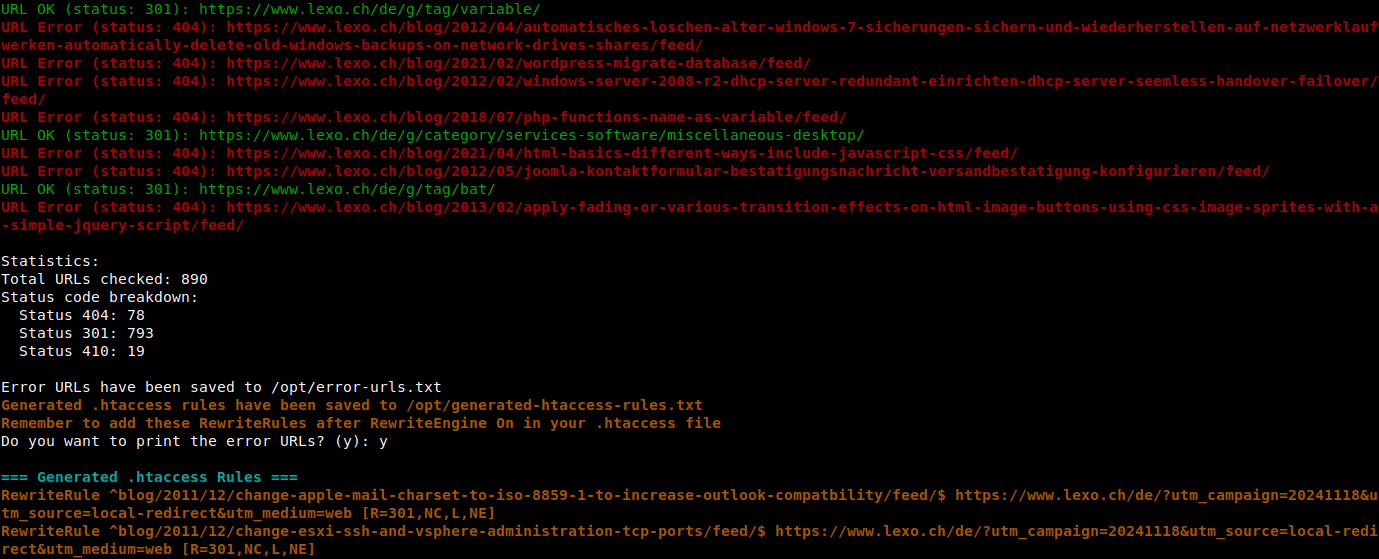

./script-name.shThe script will provide real-time feedback about each URL it checks and generate a summary at the end:

Statistics:

Total URLs checked: 890

Status code breakdown:

Status 301: 871

Status 404: 19

Error URLs have been saved to /opt/error-urls.txt

Generated .htaccess rules have been saved to /opt/generated-htaccess-rules.txt

Common URL Patterns That Cause 404 Errors

Several URL patterns frequently lead to 404 errors:

| Pattern | Example | Common Cause |

|---|---|---|

| Old Blog Posts | /blog/2020/old-post-title | Content restructuring without redirects |

| Changed Product URLs | /products/discontinued-item | Product catalog updates |

| Case Sensitivity | /Blog/ vs /blog/ | Inconsistent URL casing |

| Missing File Extensions | /page vs /page.html | Server configuration changes |

Simple output of faulty URLs (CREATE_HTACCESS_OUTPUT=false)

.htaccess RewriteRule output (CREATE_HTACCESS_OUTPUT=true)

Comparison with Other Solutions

| Feature | This Script | Manual Checking | Commercial Tools |

|---|---|---|---|

| Automated Validation | Yes | No | Yes |

| .htaccess Generation | Yes | No | Limited |

| Cost | Free | Free | Paid |

| Customizable | Yes | N/A | Limited |

Download the script

#!/bin/bash

# Main Configuration Option

# -----------------------

# This setting determines the output format:

# When true: Generates and displays .htaccess rewrite rules

# When false: Displays original URLs without modifications

CREATE_HTACCESS_OUTPUT=true

# The following options are only used when CREATE_HTACCESS_OUTPUT=true

# ----------------------------------------------------------------

# .htaccess URL Processing

# -----------------------

# When true, escapes dots in URL paths (e.g., .html becomes \.html) for .htaccess rules.

# This prevents dots from being interpreted as regex wildcards.

# Example: /path/to/page.html -> /path/to/page\.html

HTACCESS_ESCAPE_DOTS=false

# .htaccess Target Configuration

# ----------------------------

# Target URL for all redirects

HTACCESS_TARGET_URL="https://www.example.com/de/"

# UTM Parameters for target URL (optional)

# Set to empty string to disable UTM parameters

HTACCESS_UTM_PARAMS="utm_campaign=20241118&utm_source=local-redirect&utm_medium=web"

# Rewrite rule flags

HTACCESS_FLAGS="R=301,NC,L,NE"

# File Path Configurations

# ----------------------

# These settings are used regardless of CREATE_HTACCESS_OUTPUT setting

# Path to the file containing the list of URLs

URL_LIST="/opt/404-url-src.txt"

# Path to the file where error URLs will be saved

OUTPUT_FILE="/opt/error-urls.txt"

# Path to the file where .htaccess rules will be saved

# Note: Only used when CREATE_HTACCESS_OUTPUT=true

HTACCESS_OUTPUT_FILE="/opt/generated-htaccess-rules.txt"

# ANSI Color Definitions

# ---------------------

RED='\033[1;31m'

GREEN='\033[0;32m'

BLUE='\033[1;36m'

YELLOW='\033[1;33m'

ORANGE='\033[0;33m'

NC='\033[0m' # No Color

# Validation

# ---------

if [[ "$CREATE_HTACCESS_OUTPUT" = true && -z "$HTACCESS_TARGET_URL" ]]; then
    echo -e "${RED}Error: HTACCESS_TARGET_URL must be set when CREATE_HTACCESS_OUTPUT is true${NC}"
    exit 1
fi

# Check if the URL list file exists
if [[ ! -f "$URL_LIST" ]]; then
    echo "File not found: $URL_LIST"
    exit 1
fi

# Remove output files if they already exist

[[ -f "$OUTPUT_FILE" ]] && rm "$OUTPUT_FILE"

[[ -f "$HTACCESS_OUTPUT_FILE" ]] && rm "$HTACCESS_OUTPUT_FILE"

# Initialize counters and associative arrays

total_urls=0

declare -A status_counts

declare -A domain_urls

declare -A domain_paths

declare -A processed_domains

# Add a default key for empty domain

processed_domains["_empty_"]=""

domain_urls["_empty_"]=""

# Function Definitions

# ------------------

# Function to extract domain from URL
get_domain() {
    local url="$1"
    if [[ "$CREATE_HTACCESS_OUTPUT" = true ]]; then
        echo ""
    else
        # Extract protocol and domain (including subdomain)
        echo "$url" | sed -E 's|^(https?://[^/]+)/.*|\1/|'
    fi
}

# Function to extract path from URL
get_path() {
    local url="$1"
    if [[ "$CREATE_HTACCESS_OUTPUT" = true ]]; then
        # If generating htaccess rules, return everything after the domain portion
        echo "$url" | sed -E 's|^https?://[^/]+(/.*$)|\1|'
    else
        local domain
        domain=$(get_domain "$url")
        # Remove domain from URL to get path; the inner quotes make the
        # domain match literally instead of as a glob pattern
        echo "${url#"$domain"}"
    fi
}

# Function to handle spaces in URL
fix_spaces() {
    local url="$1"
    # Replace spaces with %20
    echo "$url" | sed 's/ /%20/g'
}

# Function to clean and format path for regex
format_path() {
    local path="$1"
    # Remove URL parameters (everything after ?)
    path="${path%%\?*}"
    if [[ "$HTACCESS_ESCAPE_DOTS" = true ]]; then
        # Escape all dots in the path if option is enabled
        path=$(echo "$path" | sed 's/\./\\./g')
    fi
    echo "$path"
}

# Function to generate .htaccess rewrite rule
generate_rewrite_rule() {
    local path="$1"
    local formatted_path
    formatted_path=$(format_path "$path")
    # Remove leading slash for RewriteRule
    formatted_path="${formatted_path#/}"
    # Build target URL with UTM parameters if provided
    local target="$HTACCESS_TARGET_URL"
    if [[ -n "$HTACCESS_UTM_PARAMS" ]]; then
        target="${target}?${HTACCESS_UTM_PARAMS}"
    fi
    # Add $ at the end of the path to ensure exact matching
    echo "RewriteRule ^${formatted_path}$ ${target} [${HTACCESS_FLAGS}]"
}


# Main Processing Loop

# ------------------

# The "|| [[ -n ... ]]" part also processes a last line without a trailing newline
while IFS= read -r url || [[ -n "$url" ]]; do
    # Trim leading and trailing whitespace (including a trailing carriage
    # return) with parameter expansion; unlike xargs, this does not fail
    # on quotes or backslashes inside a URL
    url="${url#"${url%%[![:space:]]*}"}"
    url="${url%"${url##*[![:space:]]}"}"

    # Skip empty lines
    [[ -z "$url" ]] && continue

    # Increment total URL count
    ((total_urls++))

    # Fix spaces in URL
    fixed_url=$(fix_spaces "$url")

    # Use curl to check URL status (prints 000 if no response was received)
    status_code=$(curl --user-agent "Mozilla/5.0 (Windows NT 10.0; Win64; x64) Firefox/123.0" \
        --head \
        --connect-timeout 10 \
        -o /dev/null \
        -s \
        -w "%{http_code}\n" \
        "$fixed_url")

    # Update status code count
    status_counts[$status_code]=$((${status_counts[$status_code]:-0} + 1))

    # Extract domain and path
    domain=$(get_domain "$fixed_url")
    path=$(get_path "$fixed_url")

    # Use _empty_ key for CREATE_HTACCESS_OUTPUT mode
    if [[ "$CREATE_HTACCESS_OUTPUT" = true ]]; then
        domain="_empty_"
    fi

    # Initialize array for domain if not exists
    if [ -z "${processed_domains[$domain]:-}" ]; then
        domain_urls[$domain]=""
        processed_domains[$domain]="1"
    fi

    # Check the status code and process accordingly
    if [[ "$status_code" -eq 410 ]]; then
        # Special handling for 410 Gone status
        echo -e "${ORANGE}URL SKIPPED (status: 410): $fixed_url${NC}"
    elif [[ "$status_code" -ge 400 && "$status_code" -lt 500 ]]; then
        echo -e "${RED}URL Error (status: $status_code): $fixed_url${NC}"
        # Append path to domain's URL list
        domain_urls[$domain]+="$path"$'\n'
        echo "$fixed_url" >> "$OUTPUT_FILE"
        # Generate .htaccess rule if enabled
        if [[ "$CREATE_HTACCESS_OUTPUT" = true ]]; then
            generate_rewrite_rule "$path" >> "$HTACCESS_OUTPUT_FILE"
        fi
    elif [[ "$status_code" -ge 200 && "$status_code" -lt 400 ]]; then
        echo -e "${GREEN}URL OK (status: $status_code): $fixed_url${NC}"
    else
        # Any other status (5xx, or 000 when curl got no response): record
        # the URL, but write no rewrite rule to the rules file
        echo -e "${RED}URL Error (status: $status_code): $fixed_url${NC}"
        domain_urls[$domain]+="$path"$'\n'
        echo "$fixed_url" >> "$OUTPUT_FILE"
    fi
done < "$URL_LIST"

# Print Results

# ------------

# Print statistics

echo -e "\nStatistics:"
echo "Total URLs checked: $total_urls"
echo "Status code breakdown:"
for status in "${!status_counts[@]}"; do
    echo " Status $status: ${status_counts[$status]}"
done

# Count actual errors (excluding 410s)
error_count=0
if [[ -f "$OUTPUT_FILE" ]]; then
    error_count=$(wc -l < "$OUTPUT_FILE")
fi

# Check if there are any actual errors (excluding 410s)
if [[ $error_count -gt 0 ]]; then
    echo -e "\nError URLs have been saved to $OUTPUT_FILE"
    if [[ "$CREATE_HTACCESS_OUTPUT" = true ]]; then
        echo -e "${YELLOW}Generated .htaccess rules have been saved to $HTACCESS_OUTPUT_FILE${NC}"
        echo -e "${YELLOW}Remember to add these RewriteRules after RewriteEngine On in your .htaccess file${NC}"
    fi

    read -p "Do you want to print the error URLs? (y): " print_urls
    if [[ "$print_urls" == "y" ]]; then
        if [[ "$CREATE_HTACCESS_OUTPUT" = true ]]; then
            echo -e "\n${BLUE}=== Generated .htaccess Rules ===${NC}"
            # Create a temporary array to store and sort the rules
            mapfile -t sorted_paths < <(echo -n "${domain_urls[_empty_]}" | sort)
            for path in "${sorted_paths[@]}"; do
                [[ -z "$path" ]] && continue
                echo -e "${YELLOW}$(generate_rewrite_rule "$path")${NC}"
            done
        else
            echo -e "\n${BLUE}=== Error URLs ===${NC}"
            sort "$OUTPUT_FILE" | while IFS= read -r url; do
                echo -e "${BLUE}$url${NC}"
            done
        fi
    fi
else
    # Clean up empty files if they were created
    [[ -f "$OUTPUT_FILE" ]] && rm "$OUTPUT_FILE"
    [[ -f "$HTACCESS_OUTPUT_FILE" ]] && rm "$HTACCESS_OUTPUT_FILE"

    if [[ ${status_counts[410]:-0} -gt 0 ]]; then
        echo -e "\n${GREEN}No error URLs found.${NC}"
        echo -e "${ORANGE}Note: Found ${status_counts[410]} URLs with status code 410 (Gone) which were skipped.${NC}"
    else
        echo -e "\n${GREEN}Success! No error URLs found.${NC}"
    fi
    exit 0
fi

Advanced Usage and Optimization

While the script works great for on-demand URL validation, you can optimize its usage:

- Schedule regular checks using cron jobs

- Customize redirect rules based on your site's structure

- Adjust the user agent string for specific requirements

- Modify timeout settings for slower servers
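The user agent and timeout are hard-coded in the script's curl call. One way to make them adjustable is to read them from variables with defaults, as in this sketch. The names `CONNECT_TIMEOUT`, `MAX_TIME`, `USER_AGENT`, and `check_url` are hypothetical and not part of the script; `--max-time` is an extra curl option the script does not use, added here as an assumption to cap the total time per request:

```bash
#!/bin/bash
# Hypothetical overrides; the defaults for the timeout and user agent mirror
# the values hard-coded in the script. MAX_TIME is an added assumption.
CONNECT_TIMEOUT="${CONNECT_TIMEOUT:-10}"
MAX_TIME="${MAX_TIME:-30}"
USER_AGENT="${USER_AGENT:-Mozilla/5.0 (Windows NT 10.0; Win64; x64) Firefox/123.0}"

# Print the HTTP status code for one URL (000 if no response was received).
check_url() {
    curl --user-agent "$USER_AGENT" \
         --head \
         --connect-timeout "$CONNECT_TIMEOUT" \
         --max-time "$MAX_TIME" \
         --output /dev/null \
         --silent \
         --write-out '%{http_code}' \
         "$1"
}

# Example: check_url "https://www.example.com/old-page"
```

If the script were adapted this way, a slower server could be checked by exporting a larger CONNECT_TIMEOUT before the run instead of editing the curl command.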

Understanding Status Codes

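While the script's primary purpose is identifying 404 (Not Found) errors, it flags every response outside the 2xx and 3xx ranges, including other 4xx codes, 5xx server errors, and failed connections (which curl reports as 000). Rewrite rules are written to the rules file only for 4xx responses. The script treats 410 (Gone) as a special case and excludes it from the error report, since a 410 typically indicates intentionally removed content.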
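The branching can be summarized in a small stand-alone function. This is a sketch that mirrors the order of the checks in the main loop; the function name `classify_status` and the category labels are hypothetical:

```bash
#!/bin/bash
# Hypothetical helper mirroring the script's status code handling.
classify_status() {
    local code="$1"
    if [[ ! "$code" =~ ^[0-9]{3}$ ]]; then
        echo "error"            # not a three-digit status code
    elif (( 10#$code == 410 )); then
        echo "skipped"          # 410 Gone: intentionally removed, not reported
    elif (( 10#$code >= 400 && 10#$code < 500 )); then
        echo "client-error"     # e.g. 404: reported and given a rewrite rule
    elif (( 10#$code >= 200 && 10#$code < 400 )); then
        echo "ok"               # success or redirect
    else
        echo "error"            # 5xx, or 000 when curl got no response
    fi
}

classify_status 404   # prints: client-error
classify_status 410   # prints: skipped
```

The 410 check has to come before the general 4xx range; otherwise 410 responses would be treated like any other client error.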

Conclusion

This script significantly streamlines the process of managing URL errors reported by Google Search Console. By automating URL validation and .htaccess rule generation, it saves valuable time and helps maintain a healthier website. Whether you're managing a small blog or a large corporate website, this tool can be an invaluable addition to your SEO maintenance toolkit.

Getting Started

To start using the script:

- Export your URL list from Google Search Console

- Configure the script with your target redirect URL

- Run the script and review the generated .htaccess rules

- Implement the rules in your website's .htaccess file

Remember to review the generated redirect rules before implementing them on your production server, and always keep a backup of your original .htaccess file.
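The backup step can be as simple as a timestamped copy next to the original. A minimal sketch; the function name `backup_file` and the example path are hypothetical, so adjust the path to your own document root:

```bash
#!/bin/bash
# Hypothetical helper: copy a file to a timestamped backup beside it
# and print the name of the backup.
backup_file() {
    local file="$1"
    local backup
    backup="${file}.bak-$(date +%Y%m%d-%H%M%S)"
    # -p preserves the file mode and timestamps of the original
    cp -p "$file" "$backup" && printf '%s\n' "$backup"
}

# Example (path is a placeholder):
#   backup_file /var/www/html/.htaccess
```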